NLP for learners – Simple text parsing code with Stanza

Stanza lets you parse sentences in Python. It supports many languages and provides a wide variety of information about each sentence.

To use Stanza, you need to install it first, for example with `pip install stanza`.

UPOS

One of the most basic pieces of information available is the universal part-of-speech tag (UPOS).

UPOS tags are written as abbreviations with the following meanings:

| No. | Tag | Meaning |
|---|---|---|
| 1. | ADJ | adjective |
| 2. | ADP | adposition |
| 3. | ADV | adverb |
| 4. | AUX | auxiliary |
| 5. | CCONJ | coordinating conjunction |
| 6. | DET | determiner |
| 7. | INTJ | interjection |
| 8. | NOUN | noun |
| 9. | NUM | numeral |
| 10. | PART | particle |
| 11. | PRON | pronoun |
| 12. | PROPN | proper noun |
| 13. | PUNCT | punctuation |
| 14. | SCONJ | subordinating conjunction |
| 15. | SYM | symbol |
| 16. | VERB | verb |
| 17. | X | other |

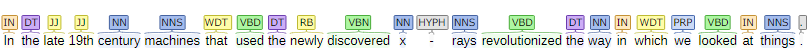
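If you want readable labels in your own output, one option is to keep the table above in a dictionary. A minimal sketch follows; `UPOS_MEANINGS` and `describe` are illustrative names, not part of Stanza, and the tag sequence is the UPOS output shown later in this article for 'the poor old woman had her bag stolen again .'.

```python
# The 17 UPOS tags from the table above, mapped to their meanings
UPOS_MEANINGS = {
    'ADJ': 'adjective', 'ADP': 'adposition', 'ADV': 'adverb',
    'AUX': 'auxiliary', 'CCONJ': 'coordinating conjunction',
    'DET': 'determiner', 'INTJ': 'interjection', 'NOUN': 'noun',
    'NUM': 'numeral', 'PART': 'particle', 'PRON': 'pronoun',
    'PROPN': 'proper noun', 'PUNCT': 'punctuation',
    'SCONJ': 'subordinating conjunction', 'SYM': 'symbol',
    'VERB': 'verb', 'X': 'other',
}

def describe(words, tags):
    """Pair each word with the meaning of its UPOS tag."""
    return [(w, UPOS_MEANINGS.get(t, 'unknown tag')) for w, t in zip(words, tags)]

words = ['the', 'poor', 'old', 'woman', 'had', 'her', 'bag', 'stolen', 'again', '.']
tags = ['DET', 'ADJ', 'ADJ', 'NOUN', 'VERB', 'PRON', 'NOUN', 'VERB', 'ADV', 'PUNCT']
for word, meaning in describe(words, tags):
    print(f'{word:<7}{meaning}')
```

Using `dict.get` with a fallback keeps the function from crashing if a tag outside the table ever appears.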

XPOS

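XPOS provides more detailed part-of-speech information than UPOS. For English, these are Penn Treebank-style tags. Punctuation gets its own tags that are not listed below, such as `.` for sentence-final punctuation and `HYPH` for hyphens (both appear in the output later in this article).

The listed tags mean the following: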

| No. | Tag | Meaning |
|---|---|---|
| 1. | CC | Coordinating conjunction |
| 2. | CD | Cardinal number |
| 3. | DT | Determiner |
| 4. | EX | Existential there |
| 5. | FW | Foreign word |
| 6. | IN | Preposition or subordinating conjunction |
| 7. | JJ | Adjective |
| 8. | JJR | Adjective, comparative |
| 9. | JJS | Adjective, superlative |
| 10. | LS | List item marker |
| 11. | MD | Modal |
| 12. | NN | Noun, singular or mass |
| 13. | NNS | Noun, plural |
| 14. | NNP | Proper noun, singular |
| 15. | NNPS | Proper noun, plural |
| 16. | PDT | Predeterminer |
| 17. | POS | Possessive ending |
| 18. | PRP | Personal pronoun |
| 19. | PRP$ | Possessive pronoun |
| 20. | RB | Adverb |
| 21. | RBR | Adverb, comparative |
| 22. | RBS | Adverb, superlative |
| 23. | RP | Particle |
| 24. | SYM | Symbol |
| 25. | TO | to |
| 26. | UH | Interjection |
| 27. | VB | Verb, base form |
| 28. | VBD | Verb, past tense |
| 29. | VBG | Verb, gerund or present participle |
| 30. | VBN | Verb, past participle |
| 31. | VBP | Verb, non-3rd person singular present |
| 32. | VBZ | Verb, 3rd person singular present |
| 33. | WDT | Wh-determiner |
| 34. | WP | Wh-pronoun |
| 35. | WP$ | Possessive wh-pronoun |
| 36. | WRB | Wh-adverb |

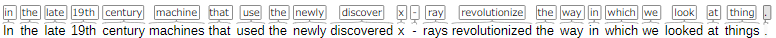
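The extra detail in XPOS matters when, for example, you want to tell verb forms apart. In the parse of 'the poor old woman had her bag stolen again .' shown later in this article, both 'had' and 'stolen' are `VERB` in UPOS, but XPOS marks 'had' as `VBD` (past tense) and 'stolen' as `VBN` (past participle). A minimal sketch using those values; `words_with_tag` is an illustrative helper name:

```python
# Word, UPOS, and XPOS lists for texts[1], copied from the output later in this article
words = ['the', 'poor', 'old', 'woman', 'had', 'her', 'bag', 'stolen', 'again', '.']
upos = ['DET', 'ADJ', 'ADJ', 'NOUN', 'VERB', 'PRON', 'NOUN', 'VERB', 'ADV', 'PUNCT']
xpos = ['DT', 'JJ', 'JJ', 'NN', 'VBD', 'PRP$', 'NN', 'VBN', 'RB', '.']

def words_with_tag(words, tags, tag):
    """Return the words whose tag equals `tag`."""
    return [w for w, t in zip(words, tags) if t == tag]

print(words_with_tag(words, upos, 'VERB'))  # ['had', 'stolen']
print(words_with_tag(words, xpos, 'VBD'))   # ['had']
print(words_with_tag(words, xpos, 'VBN'))   # ['stolen']
```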

Lemma

A lemma is the dictionary form (headword) of a word. For example, 'take' appears as 'takes', 'took', 'taken', and 'taking', but the lemma of all of these forms is 'take'.

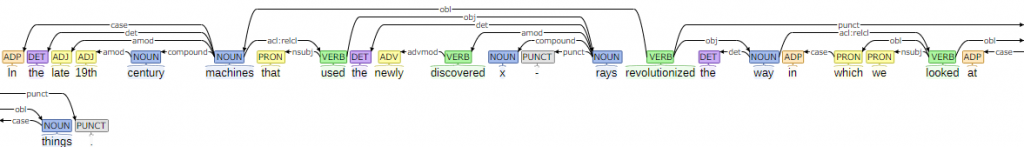
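To see lemmatization at work, the sketch below compares the surface forms and lemmas that Stanza returns for the third sentence later in this article, then counts them with `collections.Counter`. Counting lemmas instead of surface forms merges 'hour' and 'hours' into one entry.

```python
from collections import Counter

# Surface forms and lemmas for texts[2], copied from the output later in this article
char = ['a', 'rush', '-', 'hour', 'traffic', 'jam', 'delayed', 'my', 'arrival', 'by', 'two', 'hours', '.']
lemma = ['a', 'rush', '-', 'hour', 'traffic', 'jam', 'delay', 'my', 'arrival', 'by', 'two', 'hour', '.']

# Words whose lemma differs from the form in the text
changed = [(c, l) for c, l in zip(char, lemma) if c != l]
print(changed)  # [('delayed', 'delay'), ('hours', 'hour')]

# 'hour' occurs once as a surface form but twice as a lemma
print(Counter(char)['hour'], Counter(lemma)['hour'])  # 1 2
```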

Dependency

Dependency parsing tells you which word each word depends on (its head) and what kind of relation (deprel) links the two.
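Each word has one head (the root's head is 0), so you can also turn the relation around and list the words that depend on a given word. A minimal sketch, using the lemmas and heads that the code later in this article produces for 'the poor old woman had her bag stolen again .'. Keying the dictionary by lemma is a simplification: it would merge two different words that share a lemma, such as the two 'hour' tokens in the third sentence, so for real use you would key by word id instead.

```python
from collections import defaultdict

# Lemmas and head lemmas for texts[1], copied from the lists printed later in this article
lemma = ['the', 'poor', 'old', 'woman', 'have', 'she', 'bag', 'steal', 'again', '.']
head = ['woman', 'woman', 'woman', 'have', 'root', 'bag', 'have', 'have', 'steal', 'have']

# Invert "word -> head" into "head -> list of dependents"
children = defaultdict(list)
for dependent, governor in zip(lemma, head):
    children[governor].append(dependent)

print(children['root'])   # ['have']
print(children['have'])   # ['woman', 'bag', 'steal', '.']
print(children['woman'])  # ['the', 'poor', 'old']
```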

Extraction

```python
with open('articles_u.txt', encoding='utf-8') as f:
    text = f.read()
texts = text.replace('eos', '.\n').splitlines()
```

This reads the text. The file marks the end of each sentence with the symbol `eos`, so we replace it with a period and a line break, then split the text into a list of sentences with `splitlines()`.
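Note that `str.replace` matches the substring anywhere, so a word such as 'videos' would also be cut at 'eos'. If your text may contain such words, a regular expression with word boundaries (`\b`) is safer. The sketch below assumes that `eos` always appears as a separate, space-delimited token; `split_sentences` and the sample string are made up for illustration.

```python
import re

def split_sentences(text):
    """Replace standalone 'eos' markers with a period and split into sentences.

    Assumes 'eos' is a separate token; words that merely contain 'eos'
    (e.g. 'videos') are left intact because of the \\b word boundaries.
    """
    marked = re.sub(r'\beos\b', '.\n', text)
    # Strip spaces left around the markers and drop empty lines
    return [s.strip() for s in marked.splitlines() if s.strip()]

sample = 'we watched two videos eos the test begins at nine eos'
print(split_sentences(sample))
# ['we watched two videos .', 'the test begins at nine .']
```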

```python
stanza.download('en')
```

This downloads the English models, which are needed to parse the text. The models are large (more than 1 GB here), but models that are already on disk are not downloaded again on later runs.

```python
nlp = stanza.Pipeline(lang='en')
```

This builds a pipeline for English. You can think of the pipeline as a machine that takes text in and returns the parse results.

Here we parse the first three sentences of the list `texts` with `for line in range(3):`. These sentences are:

```
texts[0] = 'i expect all of you to be here five minutes before the test begins without fail .'
texts[1] = 'the poor old woman had her bag stolen again .'
texts[2] = 'a rush-hour traffic jam delayed my arrival by two hours .'
```

Let’s do some parsing.

```python
doc = nlp(texts[line])
```

This parses each sentence and stores the result in the object `doc`. Its contents look like this (abbreviated):

```
doc =
[
  {
    "id": 1,
    "text": "i",
    "lemma": "i",
    "upos": "PRON",
    "xpos": "PRP",
    "feats": "Case=Nom|Number=Sing|Person=1|PronType=Prs",
    "head": 2,
    "deprel": "nsubj",
    "misc": "start_char=0|end_char=1",
    "ner": "O"
  },
  {
    "id": 2,
    "text": "expect",
    "lemma": "expect",
    "upos": "VERB",
    "xpos": "VBP",
    "feats": "Mood=Ind|Tense=Pres|VerbForm=Fin",
    "head": 0,
    "deprel": "root",
    "misc": "start_char=2|end_char=8",
    "ner": "O"
  },
  {
    "id": 3,
    "text": "all",
    "lemma": "all",
    "upos": "DET",
    "xpos": "DT",
    "head": 2,
    "deprel": "obj",
    "misc": "start_char=9|end_char=12",
    "ner": "O"
  },
  ...
```

Next, we convert the information in this object into lists.

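```python
for word in doc.sentences[0].words:
    char.append(word.text)      # the word as it appears in the text
    lemma.append(word.lemma)    # dictionary form
    pos.append(word.upos)       # UPOS tag
    xpos.append(word.xpos)      # XPOS tag
    deprel.append(word.deprel)  # dependency relation
```

A `for` statement extracts the information about each word from the object. `doc.sentences[0]` is the first sentence in `doc`; here each line holds one sentence.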
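The loop above copies only some of the fields. The `feats` and `misc` fields in the `doc` output pack several `Key=Value` pairs into one string separated by `|`, so a small parser helps if you want to use them. A sketch, assuming the string format shown in the output above; `parse_ud_field` is a hypothetical helper name. The word 'all' has no `feats` entry in the output, so the helper also returns an empty dict for a missing value (assumed to arrive as `None`).

```python
def parse_ud_field(field):
    """Turn a 'Key=Value|Key=Value' string into a dict; None or '_' gives {}."""
    if not field or field == '_':
        return {}
    return dict(item.split('=', 1) for item in field.split('|'))

# Values copied from the doc output above
feats = parse_ud_field('Case=Nom|Number=Sing|Person=1|PronType=Prs')
print(feats['Person'])  # 1 (values stay strings)

# start_char/end_char locate the word 'expect' in the original sentence
sentence = 'i expect all of you to be here five minutes before the test begins without fail .'
misc = parse_ud_field('start_char=2|end_char=8')
span = sentence[int(misc['start_char']):int(misc['end_char'])]
print(span)  # expect
```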

```python
for word in doc.sentences[0].words:
    head.append(lemma[word.head - 1] if word.head > 0 else 'root')
```

`word.head` is the id of the word that this word depends on (see the `doc` output above). For example, 'all' depends on 'expect', but its `head` only holds the id 2.

Because the id alone does not tell us which word it refers to, we use a conditional expression to look up the lemma at that position. Ids start at 1 while list indices start at 0, hence `word.head - 1`. For example, for the word 'all', `head` receives 'expect'.

If `word.head` is 0, the word does not depend on any other word: it is the root of the sentence, so we record 'root' instead.
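The id-to-lemma lookup can be checked on its own. The sketch below uses the lemmas and head ids of the first three words from the `doc` output above; `head_lemmas` is an illustrative helper, not a Stanza function. The `> 0` check matters: without it, id 0 would become index -1, which Python accepts silently and which returns the last lemma in the list instead of raising an error.

```python
def head_lemmas(lemma, head_ids):
    """Map each 1-based head id to the lemma of the head word; 0 marks the root."""
    return [lemma[h - 1] if h > 0 else 'root' for h in head_ids]

# 'i' -> head 2, 'expect' -> head 0, 'all' -> head 2 (from the doc output above)
lemma = ['i', 'expect', 'all']
head_ids = [2, 0, 2]
print(head_lemmas(lemma, head_ids))  # ['expect', 'root', 'expect']
```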

```python
chars.append(char)
lemmas.append(lemma)
poses.append(pos)
xposes.append(xpos)
heads.append(head)
deprels.append(deprel)
```

Finally, the per-sentence results are appended to the overall lists. After the three sentences, they contain the following:

```
chars =
[['i', 'expect', 'all', 'of', 'you', 'to', 'be', 'here', 'five', 'minutes', 'before', 'the', 'test', 'begins', 'without', 'fail', '.'],
 ['the', 'poor', 'old', 'woman', 'had', 'her', 'bag', 'stolen', 'again', '.'],
 ['a', 'rush', '-', 'hour', 'traffic', 'jam', 'delayed', 'my', 'arrival', 'by', 'two', 'hours', '.']]

lemmas =
[['i', 'expect', 'all', 'of', 'you', 'to', 'be', 'here', 'five', 'minute', 'before', 'the', 'test', 'begin', 'without', 'fail', '.'],
 ['the', 'poor', 'old', 'woman', 'have', 'she', 'bag', 'steal', 'again', '.'],
 ['a', 'rush', '-', 'hour', 'traffic', 'jam', 'delay', 'my', 'arrival', 'by', 'two', 'hour', '.']]

poses =
[['PRON', 'VERB', 'DET', 'ADP', 'PRON', 'PART', 'AUX', 'ADV', 'NUM', 'NOUN', 'SCONJ', 'DET', 'NOUN', 'VERB', 'ADP', 'NOUN', 'PUNCT'],
 ['DET', 'ADJ', 'ADJ', 'NOUN', 'VERB', 'PRON', 'NOUN', 'VERB', 'ADV', 'PUNCT'],
 ['DET', 'NOUN', 'PUNCT', 'NOUN', 'NOUN', 'NOUN', 'VERB', 'PRON', 'NOUN', 'ADP', 'NUM', 'NOUN', 'PUNCT']]

xposes =
[['PRP', 'VBP', 'DT', 'IN', 'PRP', 'TO', 'VB', 'RB', 'CD', 'NNS', 'IN', 'DT', 'NN', 'VBZ', 'IN', 'NN', '.'],
 ['DT', 'JJ', 'JJ', 'NN', 'VBD', 'PRP$', 'NN', 'VBN', 'RB', '.'],
 ['DT', 'NN', 'HYPH', 'NN', 'NN', 'NN', 'VBD', 'PRP$', 'NN', 'IN', 'CD', 'NNS', '.']]

heads =
[['expect', 'root', 'expect', 'you', 'all', 'here', 'here', 'expect', 'minute', 'here', 'begin', 'test', 'begin', 'here', 'fail', 'begin', 'expect'],
 ['woman', 'woman', 'woman', 'have', 'root', 'bag', 'have', 'have', 'steal', 'have'],
 ['jam', 'hour', 'hour', 'jam', 'jam', 'delay', 'root', 'arrival', 'delay', 'hour', 'hour', 'delay', 'delay']]

deprels =
[['nsubj', 'root', 'obj', 'case', 'nmod', 'mark', 'cop', 'xcomp', 'nummod', 'obl:tmod', 'mark', 'det', 'nsubj', 'advcl', 'case', 'obl', 'punct'],
 ['det', 'amod', 'amod', 'nsubj', 'root', 'nmod:poss', 'obj', 'xcomp', 'advmod', 'punct'],
 ['det', 'compound', 'punct', 'compound', 'compound', 'nsubj', 'root', 'nmod:poss', 'obj', 'case', 'nummod', 'obl', 'punct']]
```

For the meaning of each deprel label, refer to the Universal Dependencies documentation of dependency relations.
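With these parallel lists, simple questions such as "what is the subject of the main verb?" become list filters. A sketch for the third sentence, using its lists from the output above; `pairs_with_relation` is an illustrative helper name, and `nsubj` and `obj` are the Universal Dependencies labels for nominal subject and object.

```python
# Lists for texts[2], copied from the output above
lemma = ['a', 'rush', '-', 'hour', 'traffic', 'jam', 'delay', 'my', 'arrival', 'by', 'two', 'hour', '.']
head = ['jam', 'hour', 'hour', 'jam', 'jam', 'delay', 'root', 'arrival', 'delay', 'hour', 'hour', 'delay', 'delay']
deprel = ['det', 'compound', 'punct', 'compound', 'compound', 'nsubj', 'root', 'nmod:poss', 'obj', 'case', 'nummod', 'obl', 'punct']

def pairs_with_relation(lemma, head, deprel, relation):
    """Return (dependent, head) pairs whose dependency relation equals `relation`."""
    return [(l, h) for l, h, d in zip(lemma, head, deprel) if d == relation]

print(pairs_with_relation(lemma, head, deprel, 'nsubj'))  # [('jam', 'delay')]
print(pairs_with_relation(lemma, head, deprel, 'obj'))    # [('arrival', 'delay')]
```

Read together, the two pairs give the core of the sentence: a jam delayed an arrival.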

Here is the complete code.

```python
import stanza

# Read the text. The file marks each sentence end with the symbol "eos".
with open('articles_u.txt', encoding='utf-8') as f:
    text = f.read()
texts = text.replace('eos', '.\n').splitlines()

# Load Stanza (models already on disk are not downloaded again)
stanza.download('en')
nlp = stanza.Pipeline(lang='en')

# Each list collects one per-sentence list per parsed sentence
chars = []
lemmas = []
poses = []
xposes = []
heads = []
deprels = []

n_sentences = 3  # parse only the first three sentences
for line in range(n_sentences):
    char = []
    lemma = []
    pos = []
    xpos = []
    head = []
    deprel = []

    print('analyzing: ' + str(line + 1) + ' / ' + str(n_sentences), end='\r')
    doc = nlp(texts[line])

    # Collect per-word information from the first sentence of the line
    for word in doc.sentences[0].words:
        char.append(word.text)
        lemma.append(word.lemma)
        pos.append(word.upos)
        xpos.append(word.xpos)
        deprel.append(word.deprel)

    # Replace each head id (1-based, 0 = root) with the head word's lemma
    for word in doc.sentences[0].words:
        head.append(lemma[word.head - 1] if word.head > 0 else 'root')

    chars.append(char)
    lemmas.append(lemma)
    poses.append(pos)
    xposes.append(xpos)
    heads.append(head)
    deprels.append(deprel)
```